Han-Byul Kim

I'm a research engineer at Apple, where I work on making machine learning efficient. I received my Ph.D. in Computer Science & Engineering from Seoul National University under the supervision of Professor Sungjoo Yoo. I'm passionate about making machine learning faster and lighter. I mostly focus on optimizing neural networks for deployment, in terms of both algorithms and model architectures, targeting system and hardware implementation. My research explores techniques such as quantization and neural architecture search to achieve this. Additionally, I work on co-design projects that deploy optimized neural networks on FPGAs using RTL design.

Experience

Research Engineer, Apple, Jan. 2025 - Present

Research Intern, Apple, Apr. 2024 - Sep. 2024, Seattle, WA, United States. Full-time Ph.D. intern on the MIND (Machine Intelligence & Neural Design) team, AIML

Student Researcher, Google, Oct. 2022 - Dec. 2023, Seoul, Korea. Full-time Ph.D. intern on the Model Optimization team, CoreML

Research

SPD: Sync-Point Drop for efficient tensor parallelism of Large Language Models

Han-Byul Kim, Duc Hoang, Arnav Kundu, Mohammad Samragh, Minsik Cho. International Conference on Machine Learning (ICML), 2025. #Distributed_Inference, #Tensor_Parallelism

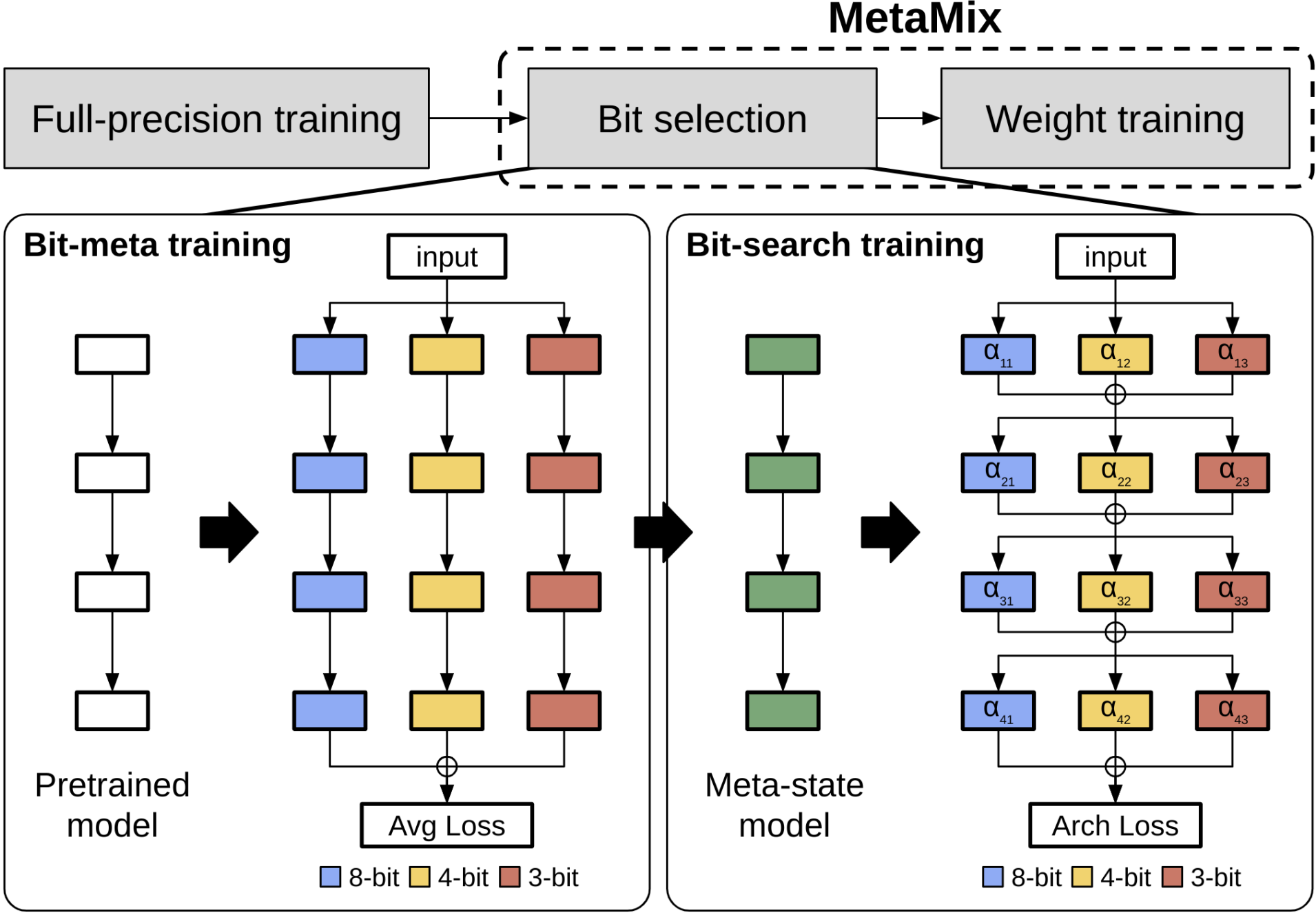

MetaMix: Meta-state Precision Searcher for Mixed-precision Activation Quantization

Han-Byul Kim, Joo Hyung Lee, Sungjoo Yoo, Hong-Seok Kim. AAAI Conference on Artificial Intelligence (AAAI), 2024. #Quantization, #Neural_Architecture_Search, #Mixed_Precision

JaxPruner: A concise library for sparsity research

Joo Hyung Lee, Wonpyo Park, Nicole Mitchell, Jonathan Pilault, Johan Obando-Ceron, Han-Byul Kim, Namhoon Lee, Elias Frantar, Yun Long, Amir Yazdanbakhsh, Shivani Agrawal, Suvinay Subramanian, Xin Wang, Sheng-Chun Kao, Xingyao Zhang, Trevor Gale, Aart Bik, Woohyun Han, Milen Ferev, Zhonglin Han, Hong-Seok Kim, Yann Dauphin, Gintare Karolina Dziugaite, Pablo Samuel Castro, Utku Evci. Conference on Parsimony and Learning (CPAL), 2024. #Sparsity, #Quantization, #Jax

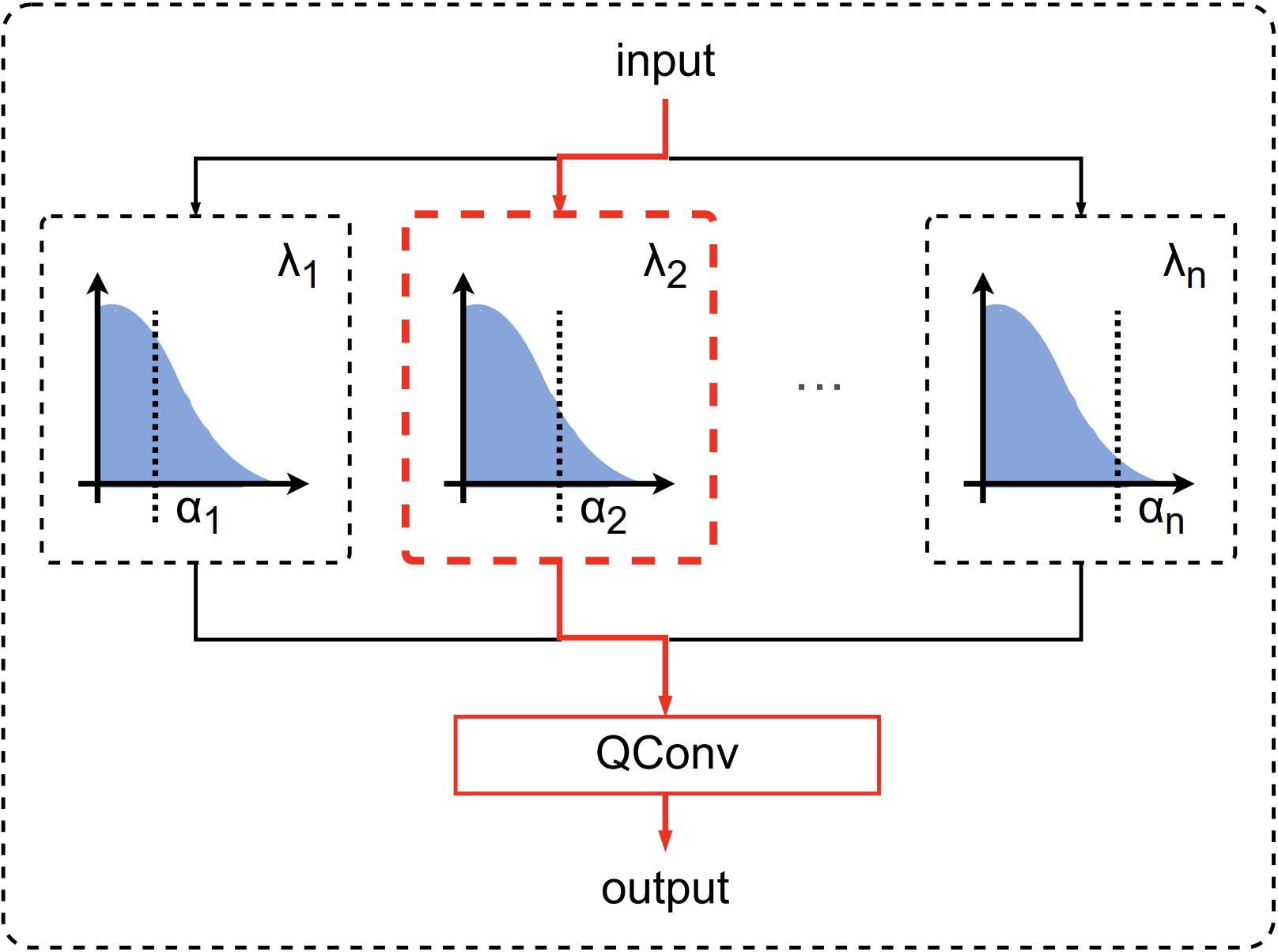

BASQ: Branch-wise Activation-clipping Search Quantization for Sub-4-bit Neural Networks

Han-Byul Kim, Eunhyeok Park, Sungjoo Yoo. European Conference on Computer Vision (ECCV), 2022. #Quantization, #Neural_Architecture_Search, #Single_Precision

For more details, please visit my LinkedIn.

Webpage source: https://github.com/jonbarron/jonbarron_website.